By Tamara Straus

Temina Madon is executive director of UC Berkeley’s Center for Effective Global Action (CEGA), whose mission is to improve lives through innovative research that drives effective policy and development programming. Madon also serves as the managing director of UC Berkeley’s Development Impact Lab, which CEGA and the Blum Center co-administer. She has worked as a science policy advisor for the Fogarty International Center of the National Institutes of Health and as a Science and Technology Policy Fellow for the U.S. Senate Committee on Health, Education, Labor and Pensions. She holds a PhD from UC Berkeley in visual neuroscience and a BS in chemical engineering and biomedical engineering from MIT. The Blum Center sat down with Madon to gather her views on impact evaluation, technology for development, and the changing intersections of development economics, engineering, and social science.

Impact evaluation has become extremely important to the field of international development, but many complain that funding is still difficult to come by. What do you think about this disconnect?

Temina Madon: William Savedoff of the Center for Global Development and Ruth Levine from the Hewlett Foundation recently wrote that approximately $130 billion is spent each year in official overseas development assistance, compared to about $50 million for evidence—i.e., for rigorous evaluation of programs. That’s less than one percent. Would any private sector company that is serious about its products spend that little on R&D? If you look at Google, Intel, and the companies that deliver the services we use every day to enhance productivity, they are spending 15 to 20 percent of their revenue on product development. I’m not saying that government operates in the same way as the private sector, nor should it. But you would think that for the delivery of essential public services that safeguard people’s health, livelihoods, and basic security, more than 1 percent would be spent on product development and evaluation.
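
To put the two figures quoted above side by side, here is a minimal arithmetic sketch. It uses only the approximate amounts cited in the answer ($130 billion and $50 million); the variable names are illustrative.

```python
# Approximate annual figures as quoted in the interview (US dollars).
oda_usd = 130e9        # official overseas development assistance
evaluation_usd = 50e6  # spending on rigorous program evaluation

# Share of assistance that goes to evaluation.
evaluation_share = evaluation_usd / oda_usd

print(f"Evaluation share of assistance: {evaluation_share:.4%}")
```

On these numbers the share is roughly 0.04 percent, well under the one percent mentioned above.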

What would it take to get the pace of investment in evidence to where it should be?

Temina Madon: I think it would take a wholesale shift in the attitude of governments away from process and consensus, toward performance—toward delivery of services that actually improve people’s health, their security, and their workforce readiness and education. Bureaucrats need to be incentivized to deliver outcomes, not programs.

What do you say to people who don’t understand impact evaluation or the cost of rigorous program evaluation?

Temina Madon: First, some programs are actually harmful, just as some drugs tested in clinical trials prove to be harmful. For example, there are programs that try to put money and empowerment into the hands of women, but sometimes they can drive negative outcomes, related to domestic violence and inter-household conflict. These kinds of programs, while very much intending to empower women, can put them at greater risk, if not implemented appropriately. You want research, evidence, and careful design of services to ensure they are implemented in ways that do no harm.

There are also many programs that have no impact. So there is an imperative to allocate money to high performance programs. We like to say that the public has, in some ways, a relatively unenforceable contract with government. We vote for politicians—essentially entering into a contract with our leaders to deliver services that keep our society functioning. But that contract cannot be enforced on a regular basis, because we vote only once in four to five years. A good government, ideally, is accountable day-to-day for the performance of public services. So it is a breach of contract when elected officials allocate resources to harmful or low-impact programs. If you have limited resources, you are supposed to invest in proven, evidence-based policies or programs. This is how you fulfill your contract with the people. Of course, we need a way to identify the highest performing programs—and that’s where rigorous evaluation is needed.

To what extent can international development efforts in the U.S. be separated from U.S. foreign policy interests?

Temina Madon: I honestly don’t know the answer to this question. But I know that USAID has tried to pivot under the current administration, away from implementing programs that are “business as usual” and more toward knowledge generation. In other words, how do we contribute knowledge that helps governments in poor countries perform more efficiently? Knowledge can be in the form of scientific advances and technologies, evidence that governments can use, and even capacity building. It is a pivot away from providing commodities for consumption, like surplus grain (during famine) or condoms to prevent HIV. We are starting to equip governments and communities to increase their resilience, improve their public service delivery, and adopt new technology. I don’t know if you can ever separate foreign assistance from foreign policy objectives. USAID reports to the Department of State. But under this administration, there has been a movement toward more knowledge sharing, technology transfer, technology development, and investment in product and service design.

Is that the right way to go? Intrinsically, as an American citizen, I prefer that we give the best that our country has to offer. U.S. overseas assistance should not be a channel for dumping surplus. As a citizen, I’d prefer that we contribute our innovation, our knowledge and management skills. We have this rich human capital; I love the idea of helping Americans to do good in the world, by connecting with opportunities to transform economies.

CEGA is among a handful of university centers experimenting with wireless sensors, mobile data, and analytics to evaluate poverty-alleviating programs. Do you see a time when one or more of these approaches will become primary or replace randomized controlled trials in impact evaluation?

Temina Madon: We see these innovations as tools for measurement in impact evaluation. Rigorous evaluations just expose the causal links between programs and outcomes. We’ll always need this approach. But how you measure your outcomes, in large part, determines the cost of an impact evaluation.

Often, evaluations are expensive, because you are doing thousands of pen-and-paper surveys. You may have to hire hundreds of enumerators to carry out two-to-three hour interviews with representative households across multiple villages, districts, and regions. You have to pay fuel and housing costs for the enumerators when they travel. If you’re measuring biometric outcomes, your surveyors need to be well trained. They may even need to be certified health workers; it depends on the tests you’re administering. You may have to pay for the rapid transport of diagnostic tests to a laboratory. This is where the high cost comes from.

If we can shift toward mobile phone surveys and wireless sensors, the costs could drop dramatically. We just need to know that newer methods are as reliable and accurate as door-to-door surveys.

Again, impact evaluations just compare the outcomes—of people, or communities, or markets—in the presence and absence of a program. But they’re also a way to give a voice to poor and vulnerable households, to allow them to express whether or not a program has worked for them. If we capture this input using sensors, mobile technology, satellites—it is still impact evaluation. We’ll still be looking for a causal link between the intervention and its impact, but the technologies reduce the cost of gathering the data. They make it easier to understand the lives, outcomes, and aspirations of the people we care about.

Can you give me an example, from either UC Berkeley or another university, of where new technologies are effectively helping to evaluate development programs?

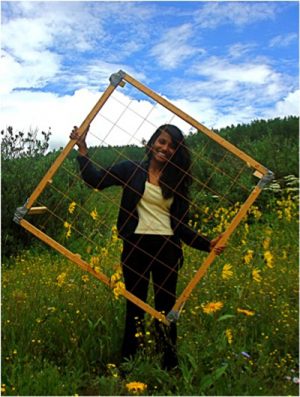

Temina Madon: One of the projects supported by the Development Impact Lab (DIL) is trying to expose use of improved cookstoves by women in Ethiopia. The stoves reduce biomass consumption and, with it, health risks from pollution. The question is what kind of educational or marketing interventions could get women to use the stoves. This project started with simple pen-and-paper interviews with women to ask about their stove use—a method that is costly and quite unreliable and biased. If you ask me whether I use your excellent new stove, I’ll probably say, “Yes!”

DIL supported a group of researchers to put temperature sensors on the stoves, in order to gather reliable, high frequency data on stove use. Sensors could lower the cost of evaluation significantly, given the amount of data and the quality of data being captured. The team started to use tablet-based surveys running ODK (Open Data Kit), and then benchmarked their survey data against the sensors, to understand the reliability of each approach. There is an upfront cost for benchmarking a new technology, and you are going to see that with all these emerging measurement technologies.
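
The benchmarking step described above, comparing what people report against what a sensor records, can be sketched roughly as follows. This is an illustration only, not the project's actual pipeline: the `StoveDay` record, the 100 °C threshold for counting a stove as "in use," and the sample readings are all assumptions made up for the example.

```python
from dataclasses import dataclass

# Hypothetical threshold: a peak reading at or above this counts as stove use.
USE_THRESHOLD_C = 100.0


@dataclass
class StoveDay:
    household: str
    reported_use: bool   # what the respondent said in the survey
    peak_temp_c: float   # highest temperature the sensor logged that day


def sensor_detects_use(day: StoveDay, threshold: float = USE_THRESHOLD_C) -> bool:
    """Treat the stove as used if the sensor peak reaches the threshold."""
    return day.peak_temp_c >= threshold


def benchmark(days: list[StoveDay]) -> dict[str, float]:
    """Compare survey answers with sensor readings for the same stove-days."""
    if not days:
        raise ValueError("need at least one observation")
    n = len(days)
    agree = sum(d.reported_use == sensor_detects_use(d) for d in days)
    over_reported = sum(d.reported_use and not sensor_detects_use(d) for d in days)
    return {
        "agreement_rate": agree / n,
        "over_report_rate": over_reported / n,
    }


# Made-up observations, for illustration only.
days = [
    StoveDay("hh1", True, 240.0),   # reported use, sensor agrees
    StoveDay("hh2", True, 31.0),    # reported use, sensor shows a cold stove
    StoveDay("hh3", False, 29.0),   # reported no use, sensor agrees
    StoveDay("hh4", True, 28.0),    # reported use, sensor shows a cold stove
]
result = benchmark(days)
print(result)
```

A high over-report rate in a comparison like this would be one signal of the "Yes!" bias described earlier in the answer.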

CEGA is funding another project that is looking at crop yields among smallholder farmers in Kenya and Uganda. Currently, when you send enumerators out to measure crop yields in a region, you take a random strip of a farm plot, cut or harvest the produce in that strip, and then estimate what the rest of the plot will yield. This is incredibly costly. We are trying to do that now with microsatellite data. Very soon, microsatellite operators expect to get optical images, updated daily, of landmass for practically the entire planet. We think we can infer crop yields on very small plots using this high resolution, high frequency data that give time slices throughout the harvest cycle. Crop yields in the context of agriculture are something development economists really care about, because we think they generate increases in income for poor households.
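
The conventional crop-cut estimate described above amounts to scaling the harvest from a sampled strip up to the whole plot. A minimal sketch, assuming yield is uniform across the plot (a simplification) and using made-up numbers; the function name is hypothetical:

```python
def estimate_plot_yield_kg(
    strip_harvest_kg: float, strip_area_m2: float, plot_area_m2: float
) -> float:
    """Scale the harvest from a sampled strip up to the full plot area.

    Assumes the strip is representative of the plot, which is the premise
    of cutting a randomly chosen strip in the first place.
    """
    if strip_area_m2 <= 0:
        raise ValueError("strip area must be positive")
    if plot_area_m2 < strip_area_m2:
        raise ValueError("plot cannot be smaller than the sampled strip")
    return strip_harvest_kg * plot_area_m2 / strip_area_m2


# Illustrative numbers only: 12 kg cut from a 25 m² strip of a 1,000 m² plot.
estimated_kg = estimate_plot_yield_kg(12.0, 25.0, 1000.0)
print(f"Estimated plot yield: {estimated_kg:.0f} kg")
```

The arithmetic is trivial; the expense lies in sending enumerators to cut and weigh the strip on every sampled plot, which is the step satellite imagery is meant to replace.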

Another new measurement approach is crowdsourcing. Premise is a tech company in San Francisco that CEGA is working with. Premise maintains a network of community members equipped with smartphones, all over the world. The company pushes customized tasks to the smartphone user, such as: identify where there is scarcity in consumable water in your neighborhood; or take a picture of houses that lack connection to the grid within 2 kilometers of your house. Premise gets the images, which are location- and time-stamped, and pays the contributors for every accepted observation. Premise is then able to produce real-time aggregate information. They can create consumer price indexes by capturing, for example, food prices from 60 markets across a district. To spur development, we can then intervene in half of those markets, and see how market prices change. This is an impact evaluation—a randomized controlled trial. But instead of sending enumerators out to measure outcomes, we are doing low-cost data collection using a new technology.
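
The market example above can be sketched as a small randomized design: pick half of the 60 markets at random, then compare average prices between the two groups. This is a simplified illustration, not CEGA's or Premise's method; the function names, the seed, and the follow-up price index values are all made up, and a real analysis would also report uncertainty around the estimate.

```python
import random
from statistics import mean


def assign_markets(market_ids: list[str], seed: int) -> set[str]:
    """Randomly select half of the markets to receive the intervention."""
    rng = random.Random(seed)   # fixed seed so the assignment is reproducible
    shuffled = list(market_ids)
    rng.shuffle(shuffled)
    return set(shuffled[: len(shuffled) // 2])


def difference_in_means(prices: dict[str, float], treated: set[str]) -> float:
    """Average price in treated markets minus average price in control markets."""
    treated_prices = [p for m, p in prices.items() if m in treated]
    control_prices = [p for m, p in prices.items() if m not in treated]
    return mean(treated_prices) - mean(control_prices)


markets = [f"market_{i:02d}" for i in range(60)]
treated = assign_markets(markets, seed=2015)

# Made-up follow-up price index: 95 in treated markets, 100 in control markets.
prices = {m: (95.0 if m in treated else 100.0) for m in markets}

effect = difference_in_means(prices, treated)
print(f"{len(treated)} treated markets, estimated effect on price index: {effect}")
```

Because assignment is random, a difference between the two groups can be attributed to the intervention rather than to how the markets were chosen; the crowdsourced prices only change how the outcome is measured.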

This is why we see the measurement agenda as essential, but also complementary, to surveys. You’re not ever going to want to get rid of surveys wholesale, because even if they introduce bias, you’re gaining beneficiaries’ perceptions. What beneficiaries tell you may not be the actual reflection of their behavior—but you may want to know what they think and say. Also, with satellites and sensors, you may not be able to capture as much information as you would with a survey. So we see these technologies as complementary and as tools that could be embedded within an impact evaluation.

What is the skill set for people going into economic development?

Temina Madon: The bottom line is that very few people have all the skills needed, and this is why we are taking a “team science” approach. We are helping to create a new discipline, development engineering. In this new field, you can’t be an economist working alone. You might need a computer scientist, an electrical engineer, a mechanical engineer, and other social scientists to work with you on the measurement component of your research. There is a fertile territory for measurement advances that engineers can contribute to and get credit for, because real innovation is required to sense physical properties or to observe trends in the field.

There are a few people who have been able to cross disciplines. In fact, this is where we got the idea for development engineering as a PhD minor. Joshua Blumenstock was a Cal PhD student in computer science who is now an assistant professor at University of Washington. He got his PhD in the Information School, but he also did a master’s in development economics. He took the core econ courses, because he was interested. So he has the engineering insights to build systems for “big data” analytics, but he also understands econometrics and he can tease out the causal linkages between interventions and their impacts. He can leverage new measurement tools, like the call detail records (CDRs) that are generated by mobile network operators. Right now, there is another UC Berkeley student in the I-School, Robert On, who is building this same hybrid skill set.

What other skills might students gain if development engineering were to blossom?

Temina Madon: There are a lot of people with a lot of opinions on this question. But the whole idea for “development engineering” came up in a discussion with Eric Brewer and Ted Miguel. We were saying: Josh Blumenstock has these unique abilities, and can tackle unique problems, because of the methods he has been exposed to. How could we create more students like him, who will know how to work with someone from a different academic tradition? We started thinking that the obvious first step is knowing what you don’t know—understanding enough development and economic theory to know that there is a body of statistical and data collection techniques that you could tap into, through collaboration. That is one piece.

The other piece is knowing how to do fieldwork. Eric Brewer’s concern was that while engineers have become “interventionists” in the ICTD [information and communication technologies for development] community, they don’t understand how to access representative, population-level data to inform their work. They are doing focus groups. But when your technology’s design is informed by a small number of focus groups, the technology is designed for that population. It’s not necessarily scalable, or representative of something that could scale. So we need to teach people how to do field experiments, impact evaluations. We need to teach about the political economy of technology, the regulatory institutions that play a role in scale-up, and how to bundle those into the design of a technology intervention.

The flip side is that for an economist, you need to know the body of technologies that exist. You may not have the modeling or hardware skills to build sensors, deploy a network, and then analyze the data. But you should at least know about the toolkit that is emerging, so that you can think more creatively about measuring outcomes in the field. So again, knowledge about new approaches outside your own discipline is so important.

What do you make of the conversations in the popular press about women in science, both in higher education and industry?

Temina Madon: I have been fortunate to grow up in a household where women are expected to be scientists and engineers. Yet I did not become a faculty member, because I did not have that desire in the discipline in which I was trained: visual neuroscience. A lot of people say women tend to seek work that has more social impact or social relevance. I don’t know if that is true or not. But it is true for me, personally. It was a big pivot in my career to move toward work that was more applied. I think “development engineering” gives an avenue for women in quantitative sciences and engineering—fields where they tend to be underrepresented—to find social impact in their research. We think development engineering is promising in that regard. But honestly, if you look at economics and engineering, the lack of female role models at the level of faculty is a pervasive problem. It is even harder for women in developing countries. A lot of our collaborators in developing countries are male, because those are the people who have risen in their universities. We have very few female research partners in developing countries.

Can you tell me a little more about your upbringing? Did you come from academics?

Temina Madon: I grew up in California. My parents are chemists; my mom ended up going into medicine, but my dad was in the “biotech revolution.” It is funny to me that I ended up working with a bunch of social scientists. CEGA is interdisciplinary, but 40 percent of our affiliates are economists and social scientists. We say we are trying to be a Bell Labs for development. I find it strange that I have migrated toward social sciences, because my family is biased towards science and engineering. My grandfather was an electronics engineer. He is Indian, and worked after Independence on building India’s engineering capacity, bringing radar into the country. He initially started in the military, and then went into the energy sector, but he was constantly pushing tech transfer, seeing the transformation of the country through its adoption of technology and infrastructure. He brought a real focus on technology as a driver of development into our family’s values.

Neuroscience and mental health are among your areas of expertise. Would you talk about your interest in those disciplines?

Temina Madon: My background was in neuroscience and that was an intellectual interest. When I was a grad student, my partner died from suicide—and that, in large ways, pivoted me toward mental health policy. I used my background in neuroscience to understand and explore the biological mechanisms responsible for depression, bipolar disorder, suicidality. I looked at the prevention tools we have. It turns out, there are very few. There is relatively little rigorous public health research on mental illness prevention, or even treatment. There are very few randomized trials. There is a lot of observational work and demonstration projects, but there is very little evaluation of community interventions to prevent suicide, or even innovation in how to measure suicide.

What trends in your field make you most hopeful?

Temina Madon: In his annual letter two years ago, Bill Gates talked about measurement. He said you can’t fix something until you have measured the problem; and you can’t even perceive something as a problem or an opportunity until you’ve measured it. I, too, am excited about the measurement agenda. I feel like so many things in our lives today are instrumented—from the web browsers we use, to our mobile phones, the GPS on our phones, the Fitbits we are wearing, and the sensors in our cars and homes. There is so much being measured, and it has created such a rich data environment. Yet much of the rest of the world is still invisible. There is very little measurement in poor communities. If you can’t record someone’s existence, if you can’t capture their life and death, their aspirations or communities’ needs—then you can’t know how to approach shared challenges with ingenuity, innovation, and intervention.

I feel like there will be a real blossoming in economic and social development with better ability to measure outcomes. But again, benchmarking is going to be important. If you are going to use new kinds of information, measurement, and data for policy intervention or program design, or to monitor and keep governments accountable, there is a lot of work to prove to ourselves that our new measurement tools are as reliable as the current gold standard.